About Me

I'm a student at the University of Oxford, working with Haggai Maron, Michael Bronstein, and Yarin Gal. My research focuses on making language models more adaptable and reliable, closing the gap between what LLMs can do in principle and what they consistently do in deployment. To that end, I work on post-training adaptation, long-context language modeling, misbehavior detection and monitoring, and data-efficient architecture design. A recurring theme in my work is exploiting structure in neural parameter spaces and decoding model behavior from weights and activations.

In 2025, I co-organized the first Weight Space Learning Workshop @ ICLR and spent the summer at Sakana AI, studying positional embeddings and length generalization in LLMs. Before Oxford, I completed my undergraduate studies at the Technion as a Rothschild scholar, studied robustness of expander graphs at the Weizmann Institute, developed fair learning algorithms at Fairgen, and taught math and programming at the ARDC. When I can find the time, I love baking 🍞, kitesurfing 🪁, and snowboarding 🏂️.

Selected Publications

* denotes equal contribution; full list on Google Scholar

Extending the Context of Pretrained LLMs by Dropping Their Positional Embeddings

, Koshi Eguchi, Takuya Akiba, Edoardo Cetin

ICLR 2026

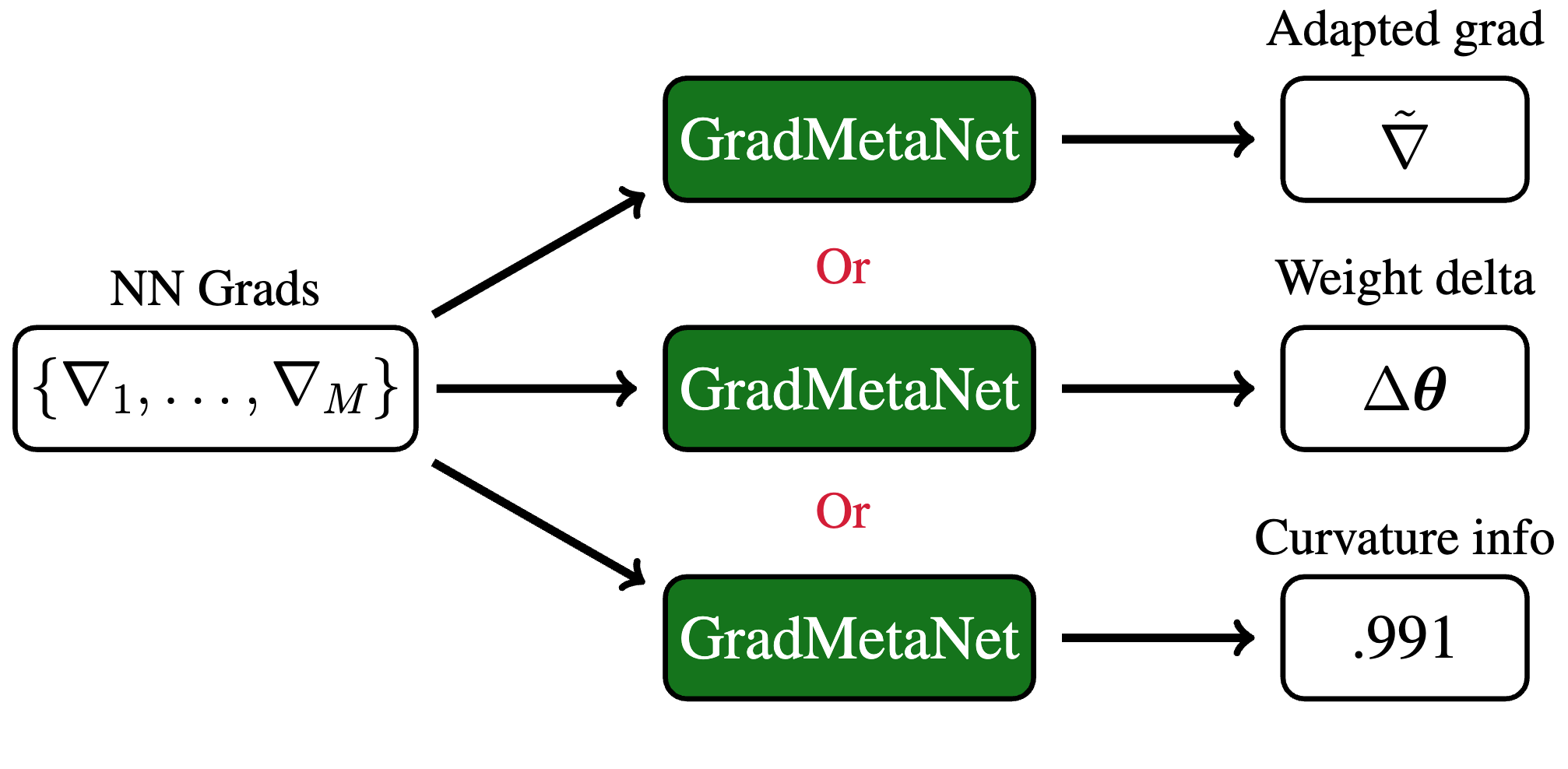

GradMetaNet: An Equivariant Architecture for Learning on Gradients

*, Yam Eitan*, Aviv Navon, Aviv Shamsian, Theo (Moe) Putterman, Michael Bronstein, Haggai Maron

NeurIPS 2025

Weight Space Learning Workshop @ ICLR 2025

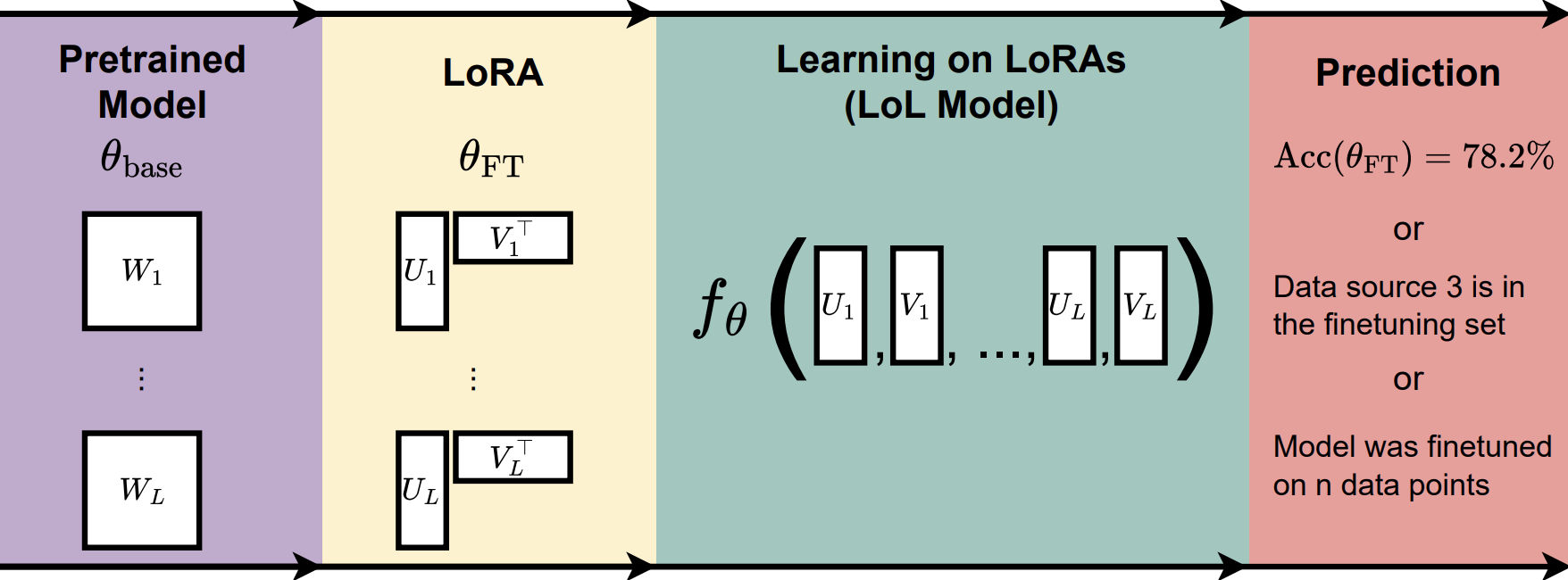

Leaning on LoRAs: GL-Equivariant Processing of Low-Rank Weight Spaces for Large Finetuned Models

Theo (Moe) Putterman*, Derek Lim*, , Stefanie Jegelka, Haggai Maron

LoG 2025, Oral Presentation 🎤

Weight Space Learning Workshop @ ICLR 2025

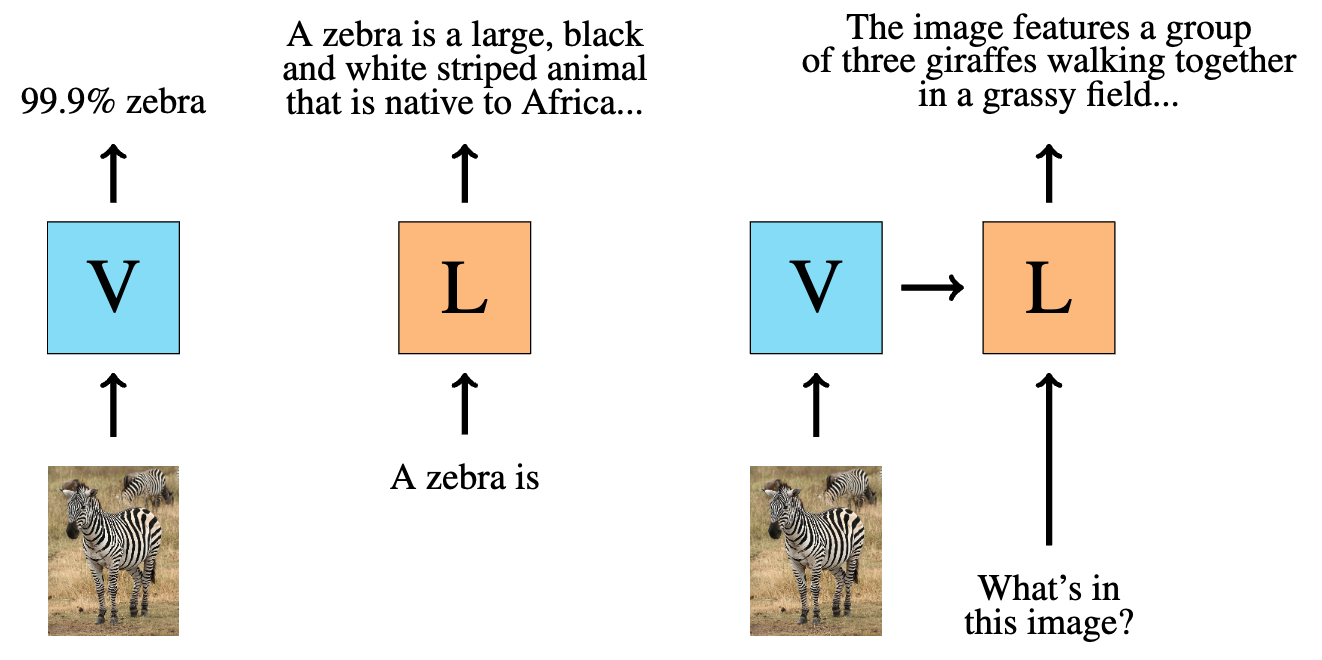

Misalignment Between Vision-Language Representations in Vision-Language Models

Yonatan Gideoni, , Tim G. J. Rudner, Yarin Gal

UniReps + CogInterp Workshops @ NeurIPS 2025

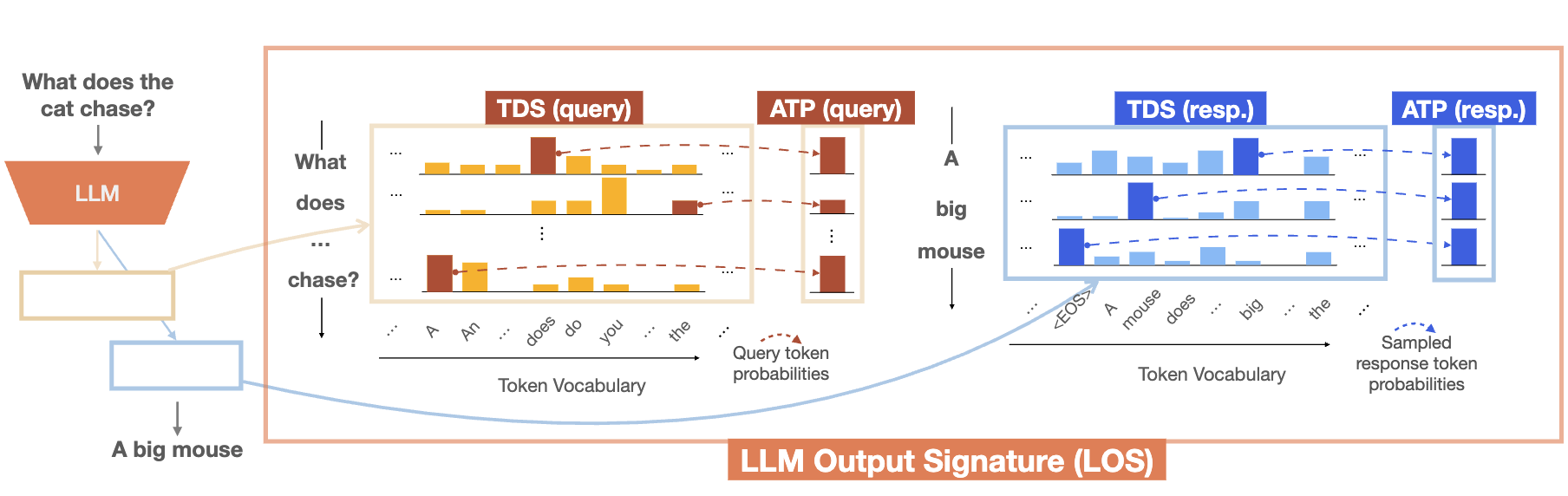

Beyond Next Token Probabilities: Learnable, Fast Detection of Hallucinations and Data Contamination on LLM Output Distributions

Fabrizio Frasca*, Guy Bar-Shalom*, Derek Lim, , Yftah Ziser, Ran El-Yaniv, Gal Chechik, Haggai Maron

AAAI 2026

R2-FM Workshop @ ICML 2025

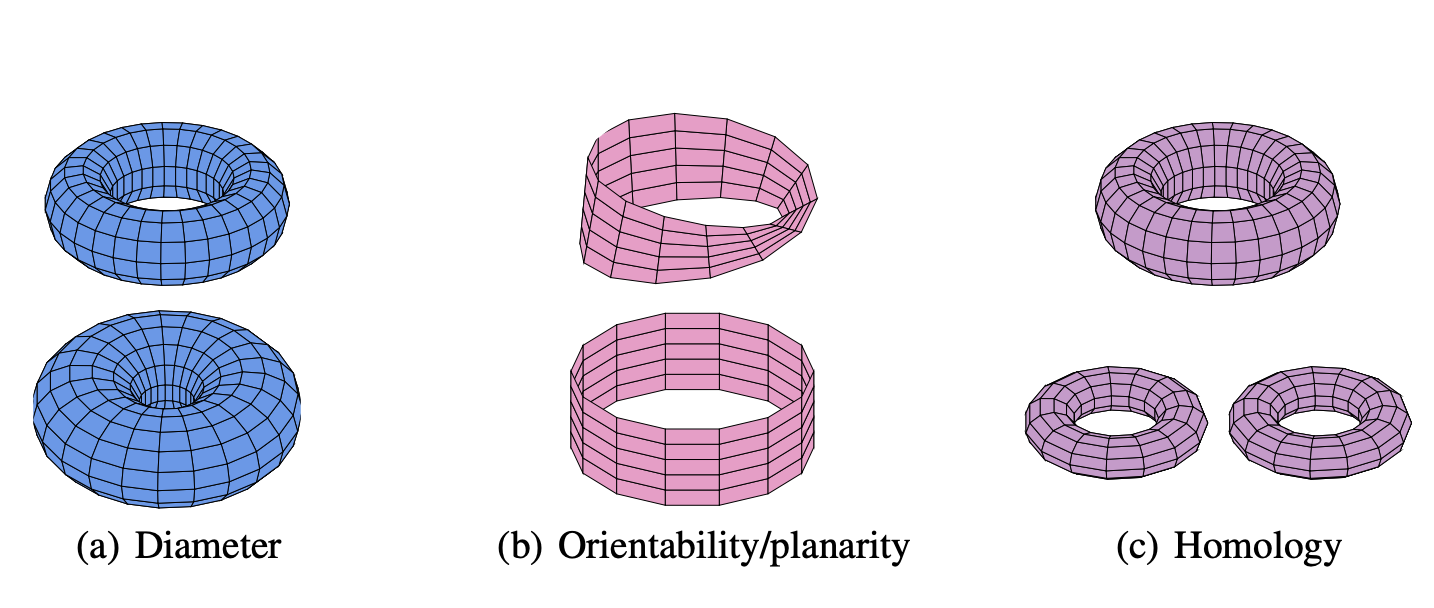

Topological Blindspots: Understanding and Extending Topological Deep Learning Through the Lens of Expressivity

Yam Eitan*, *, Guy Bar-Shalom, Fabrizio Frasca, Michael Bronstein, Haggai Maron

ICLR 2025, Oral Presentation 🎤

Variational Inference Failures Under Model Symmetries: Permutation Invariant Posteriors for Bayesian Neural Networks

, Tycho van der Ouderaa, Mark van der Wilk, Yarin Gal

PMLR, GRaM Workshop @ ICML 2024, Best Paper Award 🏆